Explainable Self-Organizing Artificial Intelligence Captures Landscape Changes Correlated with Human Impact Data

Received Date: January 21, 2024 Accepted Date: February 21, 2024 Published Date: February 24, 2024

doi: 10.17303/jcssd.2024.3.103

Citation: John Mwangi Wandeto, Birgitta Dresp-Langley (2024) Explainable Self-Organizing Artificial Intelligence Captures Landscape Changes Correlated with Human Impact Data. J Comput Sci Software Dev 3: 1-18

Abstract

Novel methods of analysis are needed to help advance our understanding of the intricate interplay between landscape changes, population dynamics, and sustainable development. Self-organized machine learning has been highly successful in the analysis of visual data the human expert eye may not be able to see. Thus, subtle but significant changes in fine visual detail in images relating to trending alterations in natural or urban landscapes, for example, may remain undetected. In the course of time, such changes may be the cause or the consequence of measurable human impact, or climate change. Capturing such change in time series of satellite images before the human eye can detect the signs thereof makes important trend information readily available at early stages to citizens, professionals and policymakers. This promotes change awareness, and facilitates early decision making for action. Here, we use unsupervised Artificial Intelligence (AI) that exploits principles of self-organized biological visual learning for the analysis of time series of satellite images. The Quantization Error (QE) in the output of a Self-Organizing Map prototype is exploited as a computational metric of variability and change. Given the proven sensitivity of this neural network metric to the intensity and polarity of image pixel contrast, and its proven selectivity to pixel colour, it is shown to capture critical changes in urban landscapes. This is achieved here on the example of satellite images from two regions of geographic interest in Las Vegas County, Nevada, USA across the years 1984-2008. The SOM analysis is combined with the statistical analysis of demographic data revealing human impacts significantly correlated with the structural changes in the specific regions of interest. 
By correlating the impact of human activities with the structural evolution of urban environments we further expand SOM analysis as a parsimonious and reliable AI approach to the rapid detection of human footprint-related environmental change.

Keywords: Landscapes; Urban Environments; Satellite Imaging; Las Vegas; Self Organizing Map (SOM); Quantization Error (QE); Demographic Data; Human Impact Analysis

Introduction

Rapid modification of human environments results in unprecedented environmental challenges that impact sustainability to greater or lesser extents depending on the specific geographic and social context. The study of urban landscape evolution and its correlation with human impact and demographic data helps understand its implications for the development of human activities in these urban ecosystems and their sustainability given the particular geographic context. Novel approaches and methods of analysis are needed to help advance our understanding of the intricate interplay between landscape changes, population dynamics, and sustainable development [1] in light of a more or less limited availability of natural resources. Subtle changes in Earth imaging data may reflect the cause or the consequence of measurable human impact or climate change [2, 3]. Predicting or mapping the risks associated with these by exploiting artificial intelligence (AI) for the analysis of satellite images is an emerging method that can support research, surveillance, prevention and control activities [4]. This often implies resorting to methods of data analysis from several disciplines to address the problems of human impact on landscapes [5]. Recognition of the complexity of the links between human impact data, landscape alterations, and climate change compels researchers to draw on interdisciplinary knowledge that marries the physical and natural sciences with social sciences and humanities. Given the slow unfolding of what may become catastrophic changes to human environments [6, 7], the methods of Earth observation image analysis need to be sufficiently sensitive to the smallest detectable changes to permit risk-aware monitoring at different scales of time and space. Such highly sensitive methods can then inform the quantitative estimation and prediction of risks as well as risk mapping.
The current array of Earth observation satellites provides access to a large quantity and variety of data with the highest possible spatial and temporal resolution [2], which facilitates their subsequent analysis. There are various approaches to the analysis of Earth image data from satellites [8-12] to study temporal changes in landscape data that may represent meaningful phenomena in terms of human impact [13] or climate change [14, 15]. Here, we use a pixel color-based approach to the analysis of Earth imaging data to show that the method consistently captures the earliest signs of structural change in satellite images of two specific geographical regions of interest (ROI) across a time period where critical landscape changes occurred. These changes are further shown to be significantly correlated with human data and demographics from public archives for the same time period, highlighting critical dimensions of human impact on the expansion of built environments and the stresses this exerts on survival-relevant natural resources (Figure 1). NASA Landsat images [16] of Las Vegas City Centre and the residential North of Las Vegas from the time period between 1984 and 2008 were submitted to analysis for this study. In the 1980s, Las Vegas City, located in the middle of the Nevada Desert, featured mainly ‘The Strip’, with a number of smaller casinos and motels. Subsequently, in a large restructuration project between 1990 and 2007, a large number of the old casinos and motels were demolished. The ensuing reconstruction of Las Vegas City Centre and the subsequent opening of a large number of mega-resorts with casino spaces, artificial tropical landscapes and urban large-scale simulations, restaurants with world-class chefs, and shows performed by international megastars transformed Las Vegas City Centre and The Strip entirely.
By offering multiple kinds of entertainment, dining, gambling, and lodging, attracting millions of visitors [17] from all over the world, Las Vegas City Centre has become one of the largest entertainment poles in the world [12]. Most elements of this project opened in late 2009. This was accompanied by the rapid spread of greater Las Vegas, including the residential North, into the adjacent desert. The population count [18] grew from thousands in 1984 to millions in 2009. Given the specific geographic location and the limited natural resources therein, the expanded urbanization of Las Vegas had consequences for its own sustainability, as demonstrated in a recent study suggesting that conservation and other strategic water supply measures have now become urgently necessary to ensure water supply for Las Vegas in the future [19, 20].

Although the current array of Earth observation satellites such as NASA’s Landsat provides access to a large quantity and variety of data with the highest possible spatial and temporal resolution [2], any technique for studying changes in imaging data across time sooner or later requires choosing a method and a model for an interpretation. This introduces potential sources of bias [21-23], even when the image analysis is combined with an advanced imaging model, which itself has to be explainable. In computer and AI-assisted methods for the analysis of image time series, there are various potential sources of bias [24, 25] at essentially three levels:

(1) Input/training data and/or their configuration [26-28].

(2) Learning algorithms [29, 30].

(3) Interpretation of output data [24, 31].

Also, a meaningful interpretation of the data may involve guesswork when the uncertainty about trends or correlations is high. In short, it is difficult to rule out subjectivity of the human agent at various steps in the process, even when the latter is a skilled expert. In addition, sustainable technology for data classification needs to combine high accuracy with further advantages relative to economy in resource demands, computing speed, objectivity, and output reproducibility. The proposed approach ensures these criteria [32], and excludes human bias at any of the three levels mentioned above. The method consists of exploiting the quantization error (QE) in the output of a SOM [33-35], a self-organizing neural network architecture that maps pixel color input (RGB) from large image data, i.e. images containing up to several millions of pixels, with the highest possible (to the single pixel) precision on the basis of unsupervised, competitive winner-take-all learning [33, 36-38]. The QE in the SOM output, frequently used as a quality metric for network quantization [34, 39, 40], has more recently proven a consistent detector of invisible local changes in streams of thousands or millions of, randomly or systematically varying, input data [32, 41-43]. When the SOM input is constant across time, the quantization error is invariant; when variations in a locally defined dimension of the input data occur across time, the QE will reliably increase or decrease depending on the direction of change. This novel aspect of input-driven self-organization [44-47] can be, as is shown here, strategically exploited in artificial neural network maps with a minimalistic functional design where the model neurons become locally and globally ordered during learning [48, 49]. Unsupervised winner-take-all learning in SOM is akin to biological synaptic learning [45, 50] and the self-organizing functional properties of biological sensory neurons [51]. 
The output metric as defined provides a highly reliable indicator that scales, in a few minutes and with to-the-single-pixel precision, local variability in time series of images containing millions of pixels each. This was previously demonstrated on image time series of natural environments [52], organs [53, 54], cells [41, 55, 56], simulated visual objects [57] and temporal series of sensor data [58, 59].

Theoretical Framework

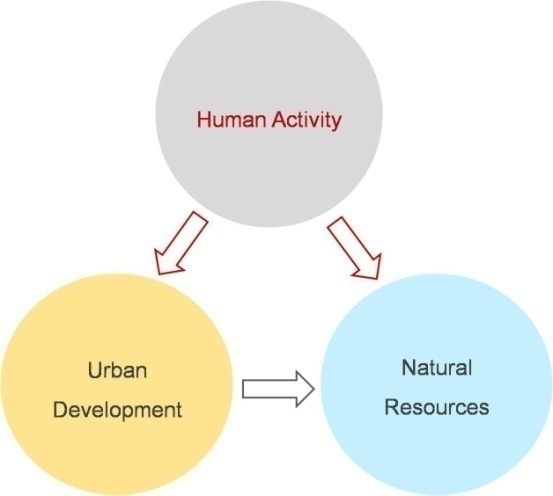

The Self-Organizing Map (SOM) may be described formally as a nonlinear, ordered, smooth mapping of high-dimensional input data onto the elements of a regular, low-dimensional array [33-35]. The general, fully connected functional architecture of the prototype for this study is graphically represented in Figure 2. It is assumed that the set of input variables can be defined as a real vector $x$ of dimension $n$. A parametric real vector $m_i$ of dimension $n$ is associated with each element in the SOM. Vector $m_i$ is a model, and the SOM is therefore an array of models. Assuming a general distance measure between $x$ and $m_i$ denoted by $d(x, m_i)$, the map of an input vector $x$ on the SOM array is defined as the array element $m_c$ that matches best (smallest $d(x, m_i)$) with $x$. During the learning process, the input vector $x$ is compared with all the $m_i$ in order to identify $m_c$. The Euclidean distances $\|x - m_i\|$ define $m_c$. Models topographically close in the map up to a certain geometric distance indicated by $h_{ci}$ will activate each other to learn something from their common input $x$.

This results in a local relaxation or smoothing effect on the models in this neighborhood, which in continuous learning leads to global ordering. Self-organized learning in a SOM is represented by the equation

$$m_i(t+1) = m_i(t) + \alpha(t)\, h_{ci}(t)\, \left[ x(t) - m_i(t) \right]$$

where $t = 1, 2, 3, \ldots$ is an integer, the discrete-time coordinate; $h_{ci}(t)$ is the neighborhood function, a smoothing kernel defined over the map points which converges towards zero with time; and $\alpha(t)$ is the learning rate, which also converges towards zero with time and affects the amount of learning in each model. At the end of the winner-take-all learning process in the SOM, each image input vector $x$ becomes associated with its best matching model on the map, $m_c$. The difference between $x$ and $m_c$, $\|x - m_c\|$, is a measure of how close the final SOM value is to the original input value and is reflected by the quantization error QE. The average QE of all $X_i$ in an image is given by

$$QE = \frac{1}{N} \sum_{i=1}^{N} \left\| X_i - m_{c_i} \right\|$$

where N is the number of input vectors x in the image. The final weights of the SOM here are defined by a three-dimensional output vector space representing R, G, and B channels. The magnitude as well as the direction of change in any of these from one image to another is reliably reflected by changes in the QE. The spatial location, or coordinates, of each of the model neurons, or domains, placed at different locations on the map, exhibit characteristics that make each one different from all the others. When a new input signal is presented to the map, the models compete and the winner will be the model the features of which most closely resemble those of the input signal. The input signal will thus be classified or grouped in one of the models. Each model or domain acts like a separate decoder for the same input, i.e. independently interprets the information carried by a new input. The input is represented as a mathematical vector of the same format as that of the models in the map. Therefore, it is the presence or absence of an active response at a specific map location and not so much the exact input-output signal transformation, or magnitude of response, that generates the interpretation of the input. To obtain the map size needed for a given study, a trial-and-error process is generally implemented [33, 39], which is directly determined by the value of the resulting quantization error; the lower this value, the better the “first guess”.
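The update rule and the QE described above can be illustrated on a toy example. The following is a minimal sketch only, not the authors' implementation: the function names are hypothetical, the Gaussian form of the neighborhood kernel is an assumption (the text only states that the kernel is a smoothing function over map points), and the two-model map and input values are made up for illustration.

```python
import numpy as np

def best_matching_unit(models, x):
    """Index c of the model m_c with the smallest Euclidean distance to x."""
    return int(np.argmin(np.linalg.norm(models - x, axis=1)))

def update_step(models, grid, x, alpha, radius):
    """One learning step: m_i <- m_i + alpha * h_ci * (x - m_i)."""
    c = best_matching_unit(models, x)
    # Assumption: Gaussian kernel over the map distance to the winner c.
    grid_dist2 = np.sum((grid - grid[c]) ** 2, axis=1)
    h = np.exp(-grid_dist2 / (2.0 * radius ** 2))
    return models + alpha * h[:, None] * (x - models)

def mean_qe(models, inputs):
    """Average distance between each input and its best matching model."""
    dists = np.linalg.norm(inputs[:, None, :] - models[None, :, :], axis=2)
    return float(dists.min(axis=1).mean())

# Two models on a 1-by-2 map and two RGB inputs (illustrative values only).
models = np.array([[0.0, 0.0, 0.0], [1.0, 1.0, 1.0]])
grid = np.array([[0.0, 0.0], [0.0, 1.0]])
inputs = np.array([[0.0, 0.0, 0.0], [1.0, 1.0, 0.0]])

qe_before = mean_qe(models, inputs)  # (0 + 1) / 2 = 0.5
updated = update_step(models, grid, inputs[1], alpha=0.2, radius=1.2)
qe_after = mean_qe(updated, inputs)
print(qe_before, qe_after)
```

In this toy case the winner for the second input is the second model, which moves one fifth of the way towards that input, while its map neighbor moves by a smaller, kernel-weighted amount.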

Materials and Methods

Images

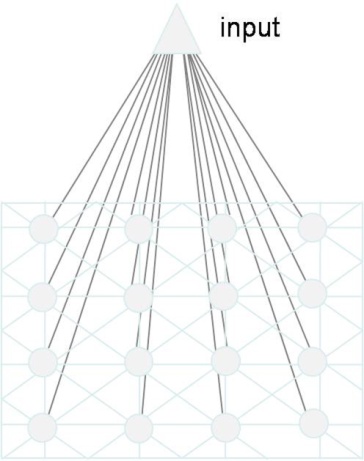

Earth observation satellites such as NASA’s Landsat provide access to data with the highest possible spatial and temporal resolution [2]. For this study, time series of NASA Landsat satellite images [16] of Las Vegas City Centre and the residential North of Las Vegas were submitted to analysis. The original images, 25 for each geographical ROI, were extracted from time-lapse animations of Las Vegas County, Nevada, for a reference time period from 1984 to 2008, as captured by NASA Landsat sensors. An open source media player [60] was used to generate static images from the time-lapse animations provided. The original images are false-colour, displaying arid desert regions in greenish-brown, building structures in gray levels and healthy vegetation/green spaces in red. Water is represented by black pixels. The 50 original static images can be downloaded from ResearchGate as indicated in the Data Availability Statement. Low-resolution copies of two of the 25 high-resolution images for the residential North, from the years 1984 and 2008, are shown in Figure 3 for illustration.

All images were pre-processed [29] to ensure they were identically scaled and aligned within a given series. This was achieved here through StackReg, a software tool for image co-registration specifically designed for scientific multidimensional image processing [61], and the ancillary plugin TurboReg. Both are plugins to ImageJ, an open source image processing software package. ImageJ’s script editor supports various programming languages including Python and MATLAB. The co-registration method exploits an automatic subpixel registration algorithm that minimizes the mean square intensity difference between a reference and a test data set, based on a coarse-to-fine iterative strategy performed according to a new variation of the Marquardt-Levenberg algorithm for nonlinear least-squares optimization [61]. StackReg is used as a front-end to TurboReg. Several mechanisms are at work to exchange data between these plugins, one of them involving temporary files which are written into the temporary directory that ImageJ defines. The StackReg software is available at http://bigwww.epfl.ch/thevenaz/stackreg/, with a direct link to TurboReg. The last image of the time series was used to anchor the registration. Control for variations in contrast intensity between images of a given series was performed after registration by increasing the image contrast and by removing strictly local variations at different times of image acquisition. For each extracted image, contrast intensity (I) normalization was ensured using

The registered and normalized image taken in 2008, the last year of a time series from each ROI, was used to train the SOM for SOM-QE analysis.

SOM-QE Analysis

Pixel RGB values were used as input stream to a four-by-four SOM with sixteen model neurons (Figure 2). This input dimensionality ensures the processing of fine image detail and permits avoiding errors due to inaccurate feature calculation, which occur frequently with complex images [62]. To yield quantitative criteria for choosing the map size, a trial-and-error process [33, 39] was implemented using the image input data. This process showed that map sizes with more than 16 model neurons produced observations where some models ended up empty, which meant that these models did not attract any input towards the end of the training. As a consequence, a four-by-four SOM architecture with 16 models was sufficient to represent all the image data. Neighborhood distance and learning rate were constant at 1.2 and 0.2 respectively. These parameters arise on the basis of the same initial trial-and-error process when testing the quality of the “map’s best first guess” [33-35], which is directly determined by the value of the resulting quantization error; the lower this value, the better the first guess. The models were initialized by randomly picking vectors from the training image, which allows the SOM to work on the original data without any prior assumptions about any level of organization within the data, but requires starting with a wider neighborhood function and a higher learning-rate factor than in procedures where initial values for model vectors are pre-selected. As a consequence, the SOM training here consisted of 1000 iterations for a two-dimensional rectangular map of 4 by 4 nodes capable of creating 16 model observations from the data. The spatial locations, or coordinates, of each of the 16 models or domains, placed at different locations on the map, exhibit characteristics that make each one different from all the others.
When a new input signal is presented to the map, the models compete and the winner will be the model the features of which most closely resemble those of the input signal. The input signals are classified or grouped in one of the models. Each model or domain acts like a separate decoder for the same input, i.e. independently interprets the information carried by new input. After the training phase, the QE in the trained map output was determined for each of the 25 images of the series. The code for running the SOM is written in Python 3 with NumPy 1.24.2, available at https://github.com/SoftwareImpacts/SIMPAC-2023-308 in a special open access collection featuring software that has been verified for computational reproducibility by Code Ocean: https://codeocean.com/capsule/3541740/tree/v2.
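The procedure described in this section can be sketched end to end as follows. This is a hedged illustration rather than the published code, which is available at the repository cited above. The map size (4 by 4), learning rate (0.2), neighborhood distance (1.2), number of iterations (1000) and random initialization from the training image come from the text. The function names, the Gaussian kernel, the choice of one randomly drawn pixel per iteration, and the small synthetic images standing in for satellite data are all assumptions made for the example.

```python
import numpy as np

def train_som(pixels, rows=4, cols=4, n_iter=1000, alpha=0.2, radius=1.2, seed=0):
    """Train a rows-by-cols SOM on an (N, 3) array of RGB pixel vectors."""
    rng = np.random.default_rng(seed)
    # Initialise the models by randomly picking vectors from the training image.
    picks = rng.choice(len(pixels), size=rows * cols, replace=False)
    models = pixels[picks].astype(float)
    grid = np.array([(r, c) for r in range(rows) for c in range(cols)], dtype=float)
    for _ in range(n_iter):
        # Assumption: one iteration presents one randomly drawn pixel.
        x = pixels[rng.integers(len(pixels))]
        winner = np.argmin(np.linalg.norm(models - x, axis=1))
        # Assumption: Gaussian neighborhood with constant radius.
        h = np.exp(-np.sum((grid - grid[winner]) ** 2, axis=1) / (2.0 * radius ** 2))
        models += alpha * h[:, None] * (x - models)
    return models

def som_qe(models, pixels):
    """Mean distance of every pixel to its best matching model.

    For images with millions of pixels this should be computed in chunks.
    """
    dists = np.linalg.norm(pixels[:, None, :] - models[None, :, :], axis=2)
    return float(dists.min(axis=1).mean())

# Synthetic stand-in for an image series: a growing bright patch on a dark scene.
rng = np.random.default_rng(1)
reference = rng.uniform(0.0, 0.5, size=(32, 32, 3))
series = []
for k in (0, 4, 8, 16):
    img = reference.copy()
    img[:k, :k] = 1.0  # k-by-k block of changed pixels
    series.append(img)

models = train_som(reference.reshape(-1, 3))
qes = [som_qe(models, img.reshape(-1, 3)) for img in series]
print(qes)
```

In this controlled example the map is trained once on a single reference image and then held fixed, so any difference in QE between images reflects a difference in pixel content rather than in the map.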

Results

The results from the analyses on the image time series for the two geographical ROI show a general trend towards increase in the QE metric for each ROI between 1984 and 2008. These results are given in Table 1 as a function of the image year and the ROI.

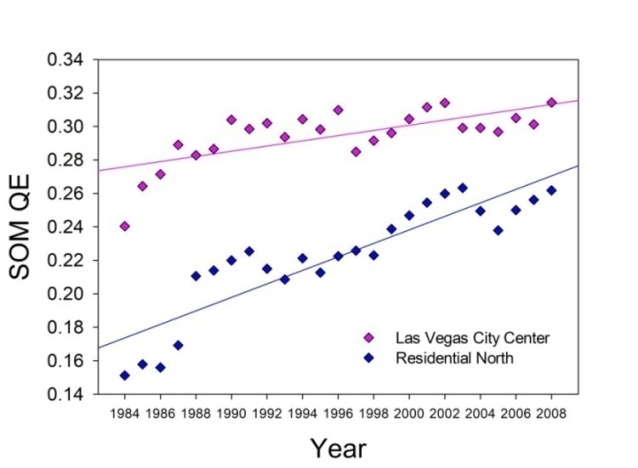

The numerical data were submitted to linear regression analysis to assess the statistical significance of the increase in SOM-QE across the years. The linear fits are shown in Figure 4 for each of the two ROI. As an estimate of the part of variance in the data that is accounted for by the linear trend, or fit, the regression coefficient r² (the coefficient of determination) is computed. It is a direct reflection of the goodness of the fit. The statistical significance of the trend in the data in any given direction, upward or downward, is determined by the probability that the slope of the linear fit differs from zero on the basis of Student’s distribution (t). These results reveal a statistically significant linear trend towards increase in the SOM-QE as a function of time for each geographical ROI. The results from the linear regression analysis with the slopes and intercepts of the fits and their regression coefficients r² are shown in Table 2. The results from the statistical trend analyses with Student’s t, degrees of freedom (df) for a given comparison and the associated probability limits (p) are given in Table 3. The regression coefficients (Table 2) reveal that the linear fit to the SOM-QE for the images of the residential North is a reasonably good one, while the linear fit to the SOM-QE for Las Vegas City is not. This is consistent with the type of structural change that took place in each of the ROI across the study years. While there was a disruptive reorganization of the City Center, with old casinos and hotel centers disappearing to be replaced or not by new ones over the years, the landscape of what is now the residential North changed in terms of a much smoother and rather progressive, step-by-step development of building and housing agglomerations in these arid regions of the Nevada desert.
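The quantities reported for the trend analysis (slope, intercept, r², Student's t and df) can be computed as in the sketch below. It assumes an ordinary least-squares fit of one variable on the year; the function name is hypothetical and the QE values are synthetic placeholders, not the values from Table 1.

```python
import math

def linear_trend(x, y):
    """Least-squares line y = intercept + slope * x, with r^2, t and df."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r2 = sxy ** 2 / (sxx * syy)  # share of variance explained by the fit
    df = n - 2                   # two parameters are estimated
    # t statistic for the slope; undefined if the fit is perfect (r2 == 1).
    t = math.copysign(math.sqrt(r2 * df / (1.0 - r2)), slope)
    return slope, intercept, r2, t, df

# Synthetic placeholder series: 25 yearly values with an upward trend.
years = list(range(1984, 2009))
qe = [0.10 + 0.002 * (yr - 1984) + 0.005 * math.sin(yr) for yr in years]

slope, intercept, r2, t, df = linear_trend(years, qe)
print(slope, intercept, r2, t, df)
```

The probability p is then obtained by comparing t with Student's distribution for the given df, for example from a statistical table or a statistics library.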

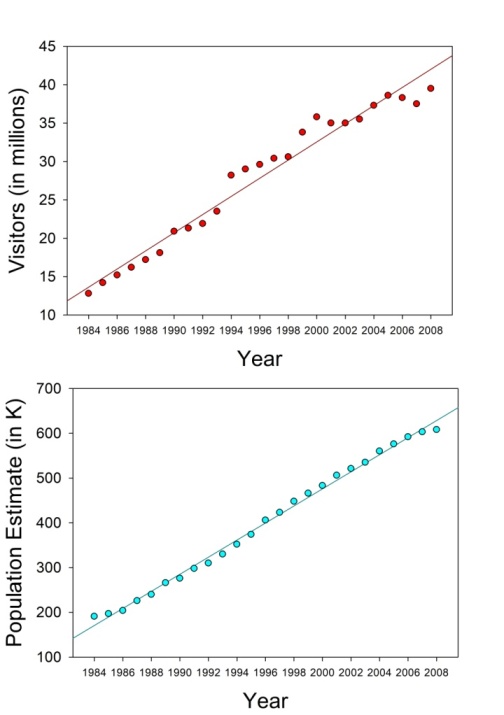

The linear trend statistics (Table 3) reveal a statistically highly significant increase in the SOM-QE across the image years for both ROI. For the same reference time period, the Las Vegas Convention and Visitors Authority (2024) and the Las Vegas Population Review (2024) have provided publicly archived data that show a steep increase of human impact across the same years as those from which the satellite images analyzed here were taken. These data are shown in Table 4 in terms of annual population estimates in thousands for Greater Las Vegas, which includes the City and the residential North, and visitors in millions.

These demographic data were also submitted to linear regression and statistical trend analysis. The linear trends are shown graphically in Figure 5. The results reveal statistically significant linear trends towards increase as a function of time for both types of human impact data. The results from the linear regression analysis with the slopes and intercepts of these fits and their regression coefficients r² are shown in Table 5. The results from the statistical trend analyses with Student’s t, degrees of freedom (df) for a given comparison and the associated probability limits (p) are given in Table 6. The regression coefficients r² in Table 5 reveal that the quality of the linear fits to the human impact data trends in terms of annual visitor increase and population growth across the study years is excellent. The steady increase in population and visitors of Greater Las Vegas is consistent with the restructuration that took place across these years, creating an increasingly larger offer for human activities and work in the entertainment sector on the one hand, and a need for more residential development catering for the needs of people providing the workforce for this expanding industry on the other. The linear trend statistics in Table 6 reveal highly significant growth trends for both demographic variables.

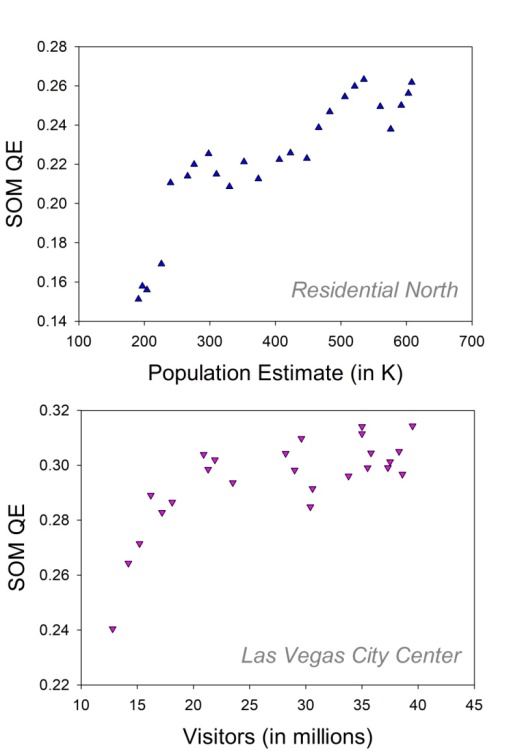

The subsequent analysis is concerned with the correlations between the QE distributions from image analysis and the distributions reflecting human impact data in terms of population and visitor growth over the years. Correlations are useful because, although a direct causality cannot be inferred, they may indicate a predictive relationship between variables. The significance and direction of correlations can then be exploited for further interpretation of the image data in their wider context. To that effect we computed Pearson's correlation coefficient R, which mathematically determines statistical covariance. The statistical significance (p) of a correlation is determined by the magnitude of the Pearson coefficient, which is directly linked to the strength of the correlation, together with the degrees of freedom, while the sign of the coefficient indicates the direction of the covariance (positive or negative) of the two variables. This analysis was performed on the paired distributions from the image analysis of the residential North and the population data, and on the paired distributions from the image analysis of Las Vegas City and the yearly visitor estimates. The correlations are shown graphically in Figure 6 for the SOM-QE data from the 25 images of the residential North as a function of the yearly population estimates (top) and the SOM-QE data from the 25 images of Las Vegas City as a function of the yearly number of visitors (bottom). The analysis signals statistically significant positive correlations between the paired variables in both cases. The correlation statistics, with Pearson coefficients R for a given comparison and the associated df and probability limits p, are shown in Table 7.
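For reference, the standard textbook definitions behind this correlation analysis can be written out as follows. The symbols $u_k$, $v_k$ and $n$ are notation introduced here for illustration (the paired observations and their number) and are not taken from the original text.

$$R = \frac{\sum_{k=1}^{n} (u_k - \bar{u})(v_k - \bar{v})}{\sqrt{\sum_{k=1}^{n} (u_k - \bar{u})^2}\,\sqrt{\sum_{k=1}^{n} (v_k - \bar{v})^2}}, \qquad t = R\,\sqrt{\frac{df}{1 - R^2}}, \qquad df = n - 2$$

Assuming all 25 yearly pairs enter a given comparison, this gives df = 23. The probability p then follows from Student's distribution for that df, so the same value of R becomes more significant as the number of pairs grows.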

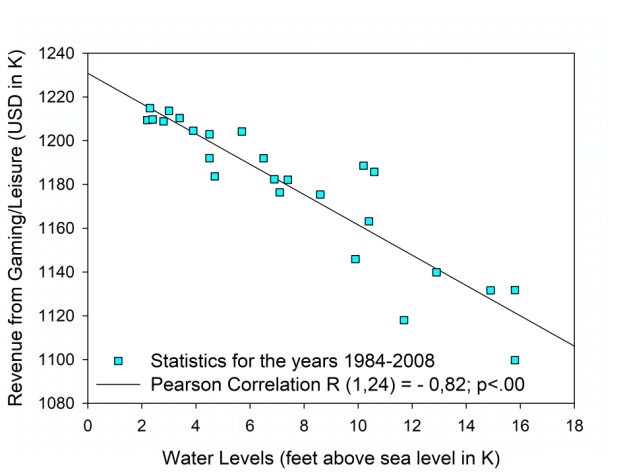

Greater Las Vegas is one of the driest regions in the world [63]. The particular problem space of considerable urban expansion and economic growth in such a region, and their impact on limited natural resources therein (Figure 1), such as water, is highlighted by further statistics. The data in [64] show a steep increase in revenue from gaming and leisure activities in these years (1984-2008), while the water level measures for Lake Mead from [20] show supply dwindling away during these same years. This is shown here in Figure 7 in terms of a significant negative correlation between economic growth, in terms of a steep increase in revenue, and water availability.

Discussion

SOM analysis of satellite images of the residential North of Greater Las Vegas and Las Vegas City Center consistently captures the structural changes in each ROI across the study years. These were characterized by a step-by-step reorganization of the City Center, with old casinos and hotel centers disappearing and replaced by more and larger new ones, and the progressive development of housing and infrastructure in desert regions that are now part of the residential North. The significant positive correlations between the QE distributions from the SOM-QE analysis of the satellite images and the statistical distributions on human impacts in terms of population growth and increasing visitor volume across the same years allow for a meaningful image interpretation in a wider sense. The human response to an increasingly larger offer of state-of-the-art entertainment in the City engendered an increasing need for residential development in desert regions to provide housing for an increasing population providing the necessary workforce. At the same time, the water supply to these arid regions dwindled away steeply. Greater Las Vegas being one of the most arid regions of the planet [63], linking the numerical model predictions statistically to numerical human impact data, as shown here, permits anticipating the scale of the effects of urbanization and directly related human impacts on this particular region. Such anticipation allows for prompt planning and implementation of measures for their mitigation, taking fully into account the specific geographic and economic context.

Conclusions

These data and observations illustrate how AI-assisted image analysis may be combined with the analysis of human impact statistics to enable an interpretation of image data in their direct societal context. The approach illustrates an explainable AI method for the rapid analysis of large data input streams by exploiting self-organized competitive learning for fully automated data analysis, in this study pixel-based satellite imaging data, prior to further scrutiny or human decision making. The method is economic in computational resources and easily implemented, and can be used as a building block within step-by-step approaches to image analysis [7], from pixels to image regions [14, 32]. Current criteria for trustworthy and sustainable AI [65, 66] are satisfied for a wide range of potential applications, with limitations similar to those of any method of automated image data analysis. Prior hypotheses and additional analyses are required to convey meaning to the classification data.

Acknowledgement

This work is sponsored by CNRS France and DEKUT Kenya.

Ethical Statement

This study does not contain any studies with human or animal subjects performed by any of the authors.

Conflict of Interest

The authors declare that they have no conflict of interest.

Data Availability Statement

The data that support the findings of this study are available in the paper. The images analyzed here are publicly available on ResearchGate at the following page: https://www.researchgate.net/publication/377577425_SatelliteImage_DataLVCityResidentialNorthtar.

References

- Craglia M, Nativi S (2018) Mind the Gap: Big Data versus Interoperability and Reproducibility of Science. In Mathieu P, Aubrecht C (eds.) Earth Observation Open Science and Innovation, 121–41.

- Kotchi SO, Bouchard C, Ludwig A, Rees EE, Brazeau S (2019) Using Earth observation images to inform risk assessment and mapping of climate change-related infectious diseases. Can Commun Dis Rep, 45: 133-42.

- Furusawa T, Koera T, Siburian R, et al. (2023) Time-series analysis of satellite imagery for detecting vegetation cover changes in Indonesia. Sci Rep, 13: 8437.

- Sudmanns M, Tiede D, Lang S, Bergstedt H, Trost G, Augustin H, Baraldi A, Blaschke T (2019) Big Earth data: disruptive changes in Earth observation data management and analysis? Int J Digit Earth, 13: 832-50.

- Schipper ELF, Dubash NK, Mulugetta Y (2021) Climate change research and the search for solutions: rethinking interdisciplinarity. Clim Change, 168: 18.

- Palmer T, Stevens B (2019) The scientific challenge of understanding and estimating climate change. Proc Natl Acad Sci USA, 116: 24390-5.

- Olsavszky V, Dosius M, Vladescu C, Benecke J (2020) Time Series Analysis and Forecasting with Automated Machine Learning on a National ICD-10 Database. Int J Environ Res Public Health, 17: 4979.

- Ahn D, Yang J, Cha M, Yang H, Kim J, Park S, Han S, Lee E, Lee S, Park S (2023) A human-machine collaborative approach measures economic development using satellite imagery. Nat Commun, 14: 6811.

- Kumar P, Miklavcic SJ (2018) Analytical Study of Colour Spaces for Plant Pixel Detection. Journal of Imaging, 4: 42.

- Metzler AB, Nathvani R, Sharmanska V, Bai W, Muller E, Moulds S, et al. (2023) Phenotyping urban built and natural environments with high-resolution satellite images and unsupervised deep learning. Sci Total Environ, 893: 164794.

- Dou X, Guo H, Zhang L, Liang D, Zhu Q, Liu X, et al. (2023) Dynamic landscapes and the influence of human activities in the Yellow River Delta wetland region. Sci Total Environ, 899: 166239.

- Luke TW (2013) Gaming Space: Casinopolitan Globalism from Las Vegas to Macau. In Steger M, McNevin A (eds.) Global Ideologies and Urban Landscapes, Routledge, pp. 77-87.

- Camacho C, Palacios S, Sáez P, Sánchez S, Potti J (2014) Human-induced changes in landscape configuration influence individual movement routines: lessons from a versatile, highly mobile species. PLoS One, 9: e104974.

- Orheim O, Lucchitta B (1990) Investigating Climate Change by Digital Analysis of Blue Ice Extent on Satellite Images of Antarctica. Annals of Glaciology, 14: 211-5.

- Bhaskar R, Frank C, Hoyer GK, Naess P, Parker J (2010) Interdisciplinarity and climate change: Transforming knowledge and practice for our global futures, Routledge.

- NASA/Goddard Space Flight Center Landsat images from USGS Earth Explorer ID 10721 (2012) Available online (last accessed 16/01/2024): http://svs.gsfc.nasa.gov/10721

- Las Vegas Convention and Visitors Authority (2024) available online (last accessed on 16/01/2024): https://www.lvcva.com/

- Las Vegas Population Review (2024) Available online (last accessed on 16/01/2024): https://worldpopulationreview.com/world-cities/las-vegas-population

- Dow C, Ahmad S, Stave K, Gerrity D (2019) Evaluating the sustainability of indirect potable reuse and direct potable reuse: a southern Nevada case study. Water Sci, 1: e1153.

- US Department of Interior Bureau of Reclamation, Hoover Dam Control Room Statistics (2023) Available online (last accessed on 16/01/2024): https://www.usbr.gov/lc/region/g4000/hourly/mead-elv.html

- Tan R, Gao L, Khan N, Guan L (2022) Interpretable Artificial Intelligence through Locality Guided Neural Networks. Neural Netw, 155: 58-73.

- Saint James Aquino Y (2023) Making decisions: Bias in artificial intelligence and data‑driven diagnostic tools. Aust J Gen Pract, 52: 439-42.

- Sylolypavan A, Sleeman D, Wu H, Sim M (2023) The impact of inconsistent human annotations on AI driven clinical decision making. NPJ Digit Med, 6: 26.

- Brown DL (2017) Bias in image analysis and its solution: Unbiased stereology. J Toxicol Pathol, 30: 183-91.

- Baxter JSH, Jannin P (2022) Bias in machine learning for computer-assisted surgery and medical image processing. Computer Assisted Surgery, 27: 1-3.

- Mac Namee B, Cunningham P, Byrne S, Corrigan OI (2002) The problem of bias in training data in regression problems in medical decision support. Artif Intell Med, 24: 51-70.

- Daneshjou R, Smith MP, Sun MD, Rotemberg V, Zou J (2021) Lack of Transparency and Potential Bias in Artificial Intelligence Data Sets and Algorithms: A Scoping Review. JAMA Dermatol, 157: 1362-9.

- Gichoya JW, Thomas K, Celi LA, Safdar N, Banerjee I, Banja JD, et al. (2023) AI pitfalls and what not to do: mitigating bias in AI. British Journal of Radiology (Bellham), 96: 20230023.

- Wieland M, Pittore M (2014) Performance Evaluation of Machine Learning Algorithms for Urban Pattern Recognition from Multi-spectral Satellite Images. Remote Sensing, 6: 2912–39.

- Nazer LH, Zatarah R, Waldrip S, Ke JXC, Moukheiber M, Khanna AK, et al. (2023) Bias in artificial intelligence algorithms and recommendations for mitigation. PLOS Digit Health, 2: e0000278.

- Drukker K, Chen W, Gichoya J, Gruszauskas N, Kalpathy-Cramer J, Koyejo S, et al. (2023) Toward fairness in artificial intelligence for medical image analysis: identification and mitigation of potential biases in the roadmap from data collection to model deployment. J Med Imaging (Bellham), 10: 061104.

- Wandeto J, Dresp-Langley B (2023) SOM-QE ANALYSIS: a biologically inspired technique to Detect and track meaningful changes within image regions. Software Impacts, 17: 100568.

- Kohonen T (1998) The self-organizing map. Neurocomputing, 21: 1–6.

- Kohonen T (2001) Self-Organizing Maps. Available online (last accessed on 16/01/2024): http://link.springer.com/10.1007/978-3-642-56927-2

- Kohonen T (2014) MATLAB Implementations and Applications of the Self-Organizing Map. Unigrafia Oy, Helsinki, Finland.

- Carpenter GA (1997) Distributed Learning, Recognition, and Prediction by ART and ARTMAP Neural Networks. Neural Networks, 10: 1473-94.

- Yang JF, Chen CM (2000) Winner-take-all neural networks using the highest threshold. IEEE Trans Neural Netw, 11: 194-9.

- Chen Y (2017) Mechanisms of Winner-Take-All and Group Selection in Neuronal Spiking Networks. Front Comput Neurosci, 11: 20.

- de Bodt E, Cottrell M, Verleysen M (2002) Statistical tools to assess the reliability of self-organizing maps. Neural Networks, 15: 967-78.

- Castagnetti A, Pegatoquet A, Miramond B (2023) Trainable quantization for Speedy Spiking Neural Networks. Front Neurosci, 17: 1154241.

- Wandeto J, Dresp-Langley B (2019a) Ultrafast automatic classification of SEM image sets showing CD4 cells with varying extent of HIV virion infection. 7ièmes Journées de la Fédération de Médecine Translationnelle de Strasbourg, May 25-26, Strasbourg, France.

- Wandeto J, Dresp-Langley B (2019b) The quantization error in a self-organizing map as a contrast and colour specific indicator of single-pixel change in large random patterns. Neural Networks Special Issue in Honor of the 80th Birthday of Stephen Grossberg, 120: 116-28.

- Wandeto J, Dresp-Langley B (2019c) The quantization error in a self-organizing map as a contrast and colour specific indicator of single-pixel change in large random patterns. Neural Networks, 119: 273-85.

- Zhang J (1991) Dynamics and Formation of Self-Organizing Maps. Neural Comput, 3: 54-66.

- Grossberg S (1993) Self-organizing neural networks for stable control of autonomous behavior in a changing world. In Taylor JG (ed.) Mathematical Approaches to Neural Networks, Elsevier Science Publishers, Amsterdam, The Netherlands, 139–97.

- Iigaya K, Fusi S (2013) Dynamical regimes in neural network models of matching behavior. Neural Comput, 25: 3093-112.

- Eglen SJ, Gjorgjieva J (2009) Self-organization in the developing nervous system: theoretical models. HFSP J, 3: 176-85.

- Erwin E, Obermayer K, Schulten K (1992) Self-organizing maps: ordering, convergence properties and energy functions. Biol Cybern, 67: 47-55.

- Ota K, Aoki T, Kurata K, Aoyagi T (2011) Asymmetric neighborhood functions accelerate ordering process of self-organizing maps. Phys Rev E Stat Nonlin Soft Matter Phys, 83: 021903.

- Hebb D (1949) The Organization of Behaviour, John Wiley & Sons, Hoboken, NJ, USA.

- Buonomano D, Merzenich M (1998) Cortical plasticity: from synapses to maps. Annu Rev Neurosci, 21: 149–56.

- Wandeto JM, Dresp-Langley B, Nyongesa HKO (2018) Vision-inspired automatic detection of water-level changes in satellite images: the example of Lake Mead. 41st European Conference on Visual Perception, Trieste, Italy. Perception, 47, ECVP Abstracts, 47.

- Wandeto J, Nyongesa H, Dresp-Langley B (2017) Detection of smallest changes in complex images comparing self-organizing map to expert performance. Perception, 46 (ECVP Abstract Supplement).

- Wandeto J, Nyongesa H, Rémond Y, Dresp-Langley B (2017) Detection of small changes in medical and random-dot images comparing self-organizing map performance to human detection. Informatics in Medicine Unlocked, 7: 39-45.

- Dresp-Langley B, Wandeto J (2020) Pixel precise unsupervised detection of viral particle proliferation in cellular imaging data. Informatics in Medicine Unlocked, 20: 100433.

- Dresp-Langley B, Wandeto J (2021b) Unsupervised classification of cell-imaging data using the quantization error in a self-organizing map. In: Arabnia HR, Ferens K, de la Fuente D, Kozerenko EB, Olivas Varela JA, Tinetti FG (eds.) Advances in Artificial Intelligence and Applied Cognitive Computing. Transactions on Computational Science and Computational Intelligence, 201-9.

- Dresp-Langley B, Wandeto J (2021a) Human symmetry uncertainty detected by a self-organizing neural network map. Symmetry, 13: 299.

- Dresp-Langley B, Liu R, Wandeto J (2021) Surgical task expertise detected by a self-organizing neural network map. 10.48550/arXiv.2106.08995

- Liu R, Wandeto J, Nageotte F, Zanne P, de Mathelin M, Dresp-Langley B (2023) Spatiotemporal modeling of grip forces captures proficiency in manual robot control. Bioengineering, 10: 59.

- The VLC media player source code (2023) Available online (last accessed on 16/01/2024): https://www.videolan.org/vlc/download-sources.html

- Thévenaz P, Ruttimann UE, Unser MA (1998) Pyramid Approach to Subpixel Registration Based on Intensity. IEEE Transactions on Image Processing, 7: 27–41.

- Schneider CA, Rasband WS, Eliceiri KW (2012) From NIH Image to ImageJ: 25 years of image analysis. Nature Methods, 9: 671.

- Frumkin H, Das MB, Negev M, Rogers BC, Bertollini R, Dora C, Desai S (2020) Protecting health in dry cities: considerations for policy makers. BMJ, 371: m2936.

- University of Nevada Center for Gaming Research Annual Statistics (2023) Available online (last accessed on 21/01/2024): https://gaming.library.unlv.edu/reports/NV_departments_hi/storic.pdf/

- High-Level Expert Group on Artificial Intelligence HLEGAI (2020) Assessment List for Trustworthy Artificial Intelligence ALTAI, The European Commission. Available online (last accessed on 16/01/2024): https://ec.europa.eu/newsroom/dae/document.cfm?doc_id=68342

- Radclyffe C, Ribeiro M, Wortham RH (2023) The assessment list for trustworthy artificial intelligence: A review and recommendations. Front Artif Intell, 6: 1020592.

- Ritter H (1990) Self-organizing maps for internal representations. Psychol Res, 52: 128-36.

- Jung J, Lee H, Jung H, Kim H (2023) Essential properties and explanation effectiveness of explainable artificial intelligence in healthcare: A systematic review. Heliyon, 9: e16110.
